What Is Web Scraping? A Complete Guide for Business and Cybersecurity Leaders

Updated on November 5, 2025, by Xcitium

Have you ever wondered how companies gather massive amounts of data from websites — everything from prices to public records — in seconds? The answer lies in web scraping, a technique that automates data extraction from the internet.

But while web scraping offers powerful business insights, it also raises serious questions about privacy, cybersecurity, and ethical use.

This guide explains what web scraping is, how it works, the tools involved, its legitimate applications, and the security measures organizations must take to protect their data and reputation.

What Is Web Scraping?

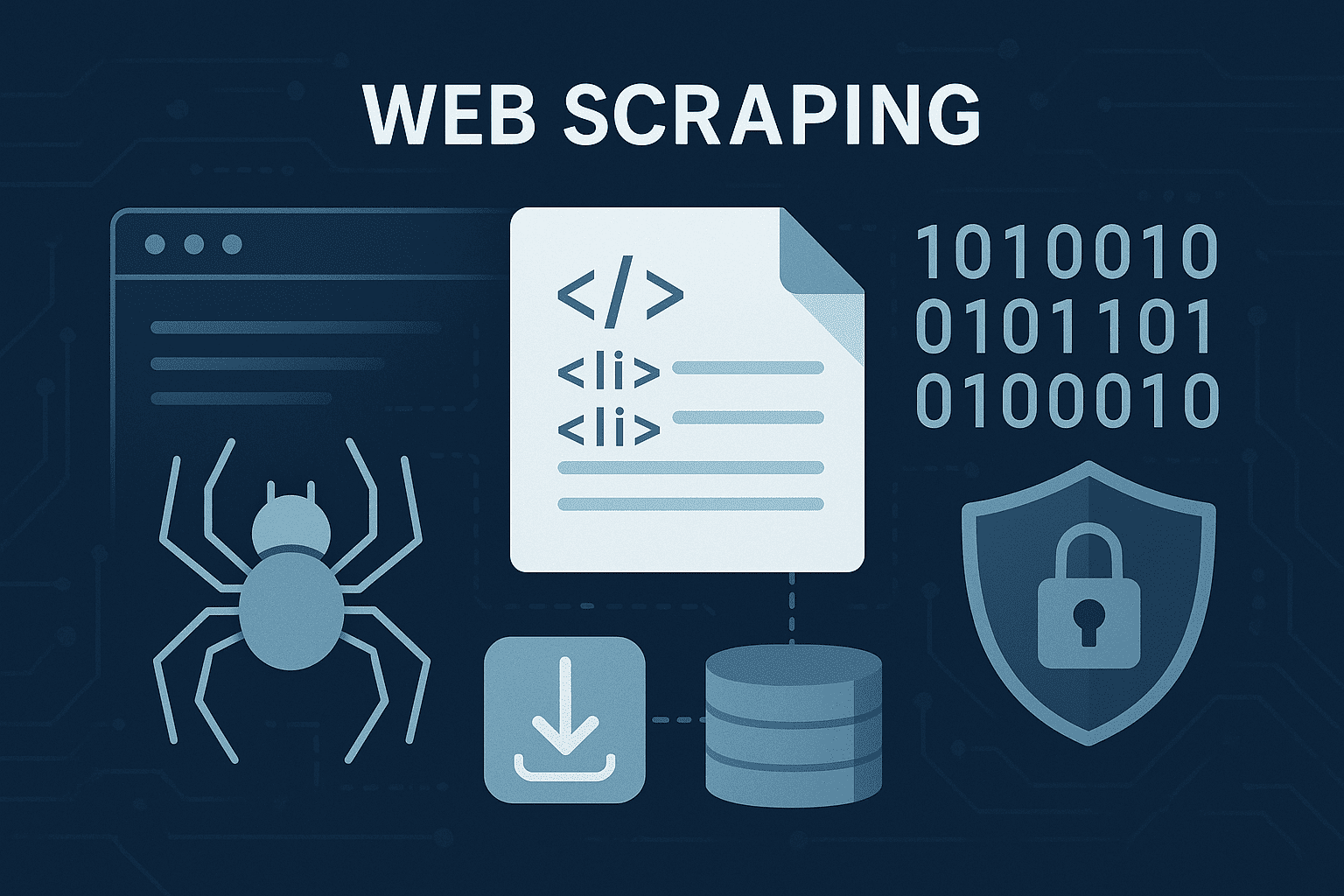

Web scraping is the automated process of extracting information from websites using scripts, bots, or specialized software.

Instead of manually copying data from web pages, web scraping tools crawl through websites, gather structured information, and export it into formats such as CSV, Excel, JSON, or XML.

For example, a company might use web scraping to:

- Track competitor prices.
- Monitor product reviews or sentiment.
- Collect financial data for analysis.
- Extract cybersecurity threat intelligence.

How Web Scraping Works

At its core, web scraping involves four main steps:

- Sending an HTTP Request: The scraper sends a request to the target website’s server, just like your browser does when you visit a webpage.
- Downloading the HTML Code: The server responds with HTML data, the same code that browsers use to display content.
- Parsing the Data: The scraper analyzes (or “parses”) the HTML structure to locate specific data, such as product names, prices, or links.
- Storing the Data: The extracted data is cleaned, organized, and exported to a usable format (e.g., Excel, database, or API).

Here’s a simple Python example:
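The original listing is not reproduced here, so what follows is a minimal sketch rather than the author's script. It uses only the Python standard library (`urllib.request` and `html.parser`) instead of a third-party tool. The `product-title` class name, the `example-scraper/0.1` user agent, and the sample markup are assumptions made for illustration.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class ProductTitleParser(HTMLParser):
    """Collects the text of every <h2> element with the class "product-title"."""

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "h2" and "product-title" in classes:
            self._in_title = True
            self.titles.append("")

    def handle_data(self, data):
        if self._in_title:
            self.titles[-1] += data

    def handle_endtag(self, tag):
        if tag == "h2" and self._in_title:
            self._in_title = False
            self.titles[-1] = self.titles[-1].strip()


def fetch_html(url):
    """Send an HTTP GET request and return the response body as text."""
    request = Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def extract_titles(html):
    """Parse the HTML and return the product titles it contains."""
    parser = ProductTitleParser()
    parser.feed(html)
    parser.close()
    return parser.titles


# Hypothetical markup standing in for a downloaded page.
SAMPLE_HTML = """
<html><body>
  <h2 class="product-title">Wireless Mouse</h2>
  <h2 class="product-title featured">USB-C Hub</h2>
  <h2 class="section">Related articles</h2>
</body></html>
"""

titles = extract_titles(SAMPLE_HTML)
print(titles)  # ['Wireless Mouse', 'USB-C Hub']
```

To run it against a live page you are permitted to scrape, pass the result of `fetch_html(url)` to `extract_titles` in place of the sample markup.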

This script scrapes product titles from a webpage — showcasing how easy and accessible web scraping can be.

Common Web Scraping Tools

Businesses and data analysts use various web scraping tools, both open-source and commercial. Popular options include:

- BeautifulSoup (Python): Simple, fast, and ideal for beginners.
- Scrapy: A powerful Python framework for large-scale scraping projects.
- Selenium: Used for scraping dynamic websites that rely on JavaScript.
- Octoparse: A user-friendly, no-code tool for structured data extraction.
- ParseHub: Supports interactive websites and API-based scraping.

These tools differ in complexity and capability, but all share the same goal — turning unstructured web content into structured data.

Types of Web Scraping

Not all scraping is the same. Different methods serve different needs:

1. HTML Parsing

Extracts specific HTML elements from a page using tag identification and pattern matching.

2. DOM Parsing

Traverses the Document Object Model (DOM), the browser’s tree representation of a page, to reach data, including content that scripts add after the page loads.

3. Web APIs

Many sites offer Application Programming Interfaces (APIs) that provide structured access to data. Where one is available, an official API is usually the most reliable and lowest-risk way to collect that data.

4. Headless Browsers

Tools like Selenium drive a real browser, often in headless mode (without a visible window), to scrape data from JavaScript-heavy pages.
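As an illustration of the Web API approach, the sketch below turns a JSON response into CSV text using only the standard library. The payload shape and field names (`products`, `name`, `price`, `currency`) are hypothetical; a real API will define its own.

```python
import csv
import io
import json

# Hypothetical response body from a product API; real field names will differ.
API_RESPONSE = """
{"products": [
  {"name": "Wireless Mouse", "price": 24.99, "currency": "USD"},
  {"name": "USB-C Hub", "price": 39.5, "currency": "USD"}
]}
"""


def products_to_csv(payload):
    """Convert the JSON payload into CSV text with a header row."""
    products = json.loads(payload)["products"]
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=["name", "price", "currency"],
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(products)
    return buffer.getvalue()


csv_text = products_to_csv(API_RESPONSE)
print(csv_text)
```

Because the API already returns structured fields, there is no HTML to parse, which is a large part of why this route tends to break less often than page scraping.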

Legitimate Uses of Web Scraping

Despite its association with gray areas, web scraping has many legitimate business applications:

- Market Research: Businesses scrape competitor prices, customer feedback, and industry trends.
- Data Aggregation: Platforms like job boards or travel sites use scraping to compile listings from multiple sources.
- Financial Analytics: Investors collect stock data, filings, and economic indicators from public sources.
- Cyber Threat Intelligence (CTI): Security teams use web scraping to monitor dark web marketplaces, forums, or leak databases.
- SEO and Digital Marketing: Marketers analyze keywords, backlinks, and search engine rankings.
- Academic Research: Universities and data scientists extract public data for machine learning and policy studies.

The Risks and Ethical Concerns of Web Scraping

While web scraping offers valuable insights, it also poses legal, ethical, and cybersecurity risks when done improperly.

1. Legal Violations

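Many websites prohibit scraping in their Terms of Service, and scraping in breach of those terms can lead to litigation. In hiQ Labs v. LinkedIn, for example, the courts weighed both Computer Fraud and Abuse Act (CFAA) and breach-of-contract claims over the scraping of public profiles.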

2. Intellectual Property (IP) Theft

Copying proprietary data, images, or content without permission may violate copyright laws.

3. Data Privacy Breaches

Scraping personal or sensitive data without a lawful basis can violate GDPR, CCPA, and other privacy regulations.

4. Server Overload

Aggressive scraping can flood a website with requests, causing denial-of-service (DoS) issues.

5. Security Threats

Malicious scrapers can be used for phishing, price manipulation, or data exfiltration from unsecured websites.

Cybersecurity Challenges in Web Scraping

Web scraping doesn’t just affect businesses doing the scraping — it can expose vulnerabilities for those being scraped.

1. Bot Attacks

Scrapers are bots. By rotating IP addresses or spreading requests across many clients, they can evade rate limits and harvest data at scale, which can lead to data leakage or website downtime.

2. API Exploitation

Attackers often target unsecured APIs that return large volumes of sensitive information.

3. Credential Harvesting

Some scrapers probe login forms or collect exposed session tokens, giving attackers a starting point for credential stuffing or session hijacking.

4. Content Manipulation

Competitors or bad actors may use scraped data to clone or spoof websites.

How to Protect Your Website from Malicious Web Scraping

Organizations must take proactive steps to safeguard their digital assets:

1. Use CAPTCHA

Prevent bots by requiring human verification during sensitive requests.

2. Rate Limiting

Set limits on the number of requests a user or IP can make per second.
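One common way to enforce such a limit is a sliding-window counter per client. The sketch below is a minimal in-memory version, assuming a single server process. The class name, the limit of three requests per second, and the sample IP address are illustrative choices, not a recommendation.

```python
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allows at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # client id -> request timestamps

    def allow(self, client_id, now):
        """Return True if the request at time `now` (in seconds) is permitted."""
        timestamps = self._history[client_id]
        # Drop timestamps that have fallen out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_requests:
            return False
        timestamps.append(now)
        return True


limiter = SlidingWindowRateLimiter(max_requests=3, window_seconds=1.0)
# Five requests from one IP within 0.4 s, then one more after the window has passed.
decisions = [limiter.allow("203.0.113.7", t) for t in (0.0, 0.1, 0.2, 0.3, 0.4, 1.5)]
print(decisions)  # [True, True, True, False, False, True]
```

In production this state usually lives in a shared store or at the load balancer, so that every server sees the same counts.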

3. IP Blocking and Monitoring

Detect suspicious traffic patterns and block scraper IPs.
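A very simple form of this monitoring is counting requests per IP address in an access log and flagging the heaviest clients. The sketch below assumes a simplified log format in which the client IP is the first whitespace-separated field. The log lines and the threshold are made up for illustration.

```python
from collections import Counter


def flag_suspicious_ips(log_lines, threshold):
    """Return IPs that appear in more than `threshold` log lines, busiest first."""
    counts = Counter(line.split()[0] for line in log_lines if line.strip())
    return [ip for ip, hits in counts.most_common() if hits > threshold]


# Hypothetical access log entries: "<ip> <method> <path>".
ACCESS_LOG = [
    "198.51.100.23 GET /products?page=1",
    "198.51.100.23 GET /products?page=2",
    "192.0.2.10 GET /",
    "198.51.100.23 GET /products?page=3",
    "198.51.100.23 GET /products?page=4",
    "192.0.2.44 GET /about",
]

suspects = flag_suspicious_ips(ACCESS_LOG, threshold=3)
print(suspects)  # ['198.51.100.23']
```

Request volume alone produces false positives (shared corporate networks, for instance), so real deployments usually combine it with other signals before blocking.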

4. Obfuscate Data

Hide or dynamically load sensitive data using JavaScript rendering. This raises the cost of scraping but does not stop tools that render pages in a browser.

5. Deploy Bot Management Solutions

Modern tools like Xcitium Endpoint Security can detect and block malicious bots using behavioral analytics.

6. Secure Your APIs

Apply authentication, authorization, and data filtering to all APIs.

Ethical Web Scraping Best Practices

If you plan to use web scraping for legitimate business insights, follow these ethical guidelines:

- ✅ Respect Robots.txt: Check a website’s robots.txt file to see which pages allow scraping.
- ✅ Avoid Overloading Servers: Set request delays to prevent site crashes.
- ✅ Scrape Only Public Data: Never extract private or sensitive information.
- ✅ Attribute Sources: Give credit where data is used publicly.
- ✅ Comply with Legal Frameworks: Understand data protection and copyright laws in your region.

By combining ethics with efficiency, businesses can gather insights without crossing compliance lines.
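The first two guidelines can be checked in code with Python's standard `urllib.robotparser` module. The sketch below parses an inline robots.txt so it runs without network access. The file contents, the `example-scraper` user agent, and the URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would download it from the site root.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-scraper"  # assumed name for this sketch

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

candidate_urls = [
    "https://shop.example/products/mouse",
    "https://shop.example/private/orders",
]

# Keep only the URLs this user agent is allowed to fetch.
allowed_urls = [url for url in candidate_urls if rules.can_fetch(USER_AGENT, url)]

# Use the site's requested delay if it declares one, otherwise fall back to 1 second.
delay_seconds = rules.crawl_delay(USER_AGENT) or 1

print(allowed_urls)   # ['https://shop.example/products/mouse']
print(delay_seconds)  # 2
```

A scraper would then call `time.sleep(delay_seconds)` between requests to the allowed URLs.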

Web Scraping and Cybersecurity Intelligence

In cybersecurity, web scraping has become a strategic intelligence tool for detecting and responding to threats.

Applications Include:

- Dark Web Monitoring: Scraping dark web marketplaces for leaked credentials or malware listings.
- Phishing Detection: Identifying fake websites or cloned domains.
- Vulnerability Tracking: Collecting CVE (Common Vulnerabilities and Exposures) data.
- Threat Actor Profiling: Monitoring hacker forums and communication patterns.

This proactive data collection helps organizations predict and prevent cyber incidents before they escalate.
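For vulnerability tracking, a small building block is pulling CVE identifiers out of scraped text. The sketch below uses a regular expression for the `CVE-YYYY-NNNN` format, in which the sequence number has four or more digits. The advisory text is a short illustrative sample, not scraped output.

```python
import re

# CVE IDs look like CVE-<year>-<sequence>, where the sequence has at least four digits.
CVE_PATTERN = re.compile(r"\bCVE-\d{4}-\d{4,}\b", re.IGNORECASE)


def extract_cve_ids(text):
    """Return the unique CVE IDs in `text`, uppercased, in first-seen order."""
    seen = {}
    for match in CVE_PATTERN.findall(text):
        seen.setdefault(match.upper(), None)
    return list(seen)


# Illustrative advisory text standing in for scraped content.
SAMPLE_ADVISORY = """
Vendors patched CVE-2021-44228 (Log4Shell) in December 2021.
A follow-up fix addressed cve-2021-45046; see also CVE-2021-44228.
"""

cve_ids = extract_cve_ids(SAMPLE_ADVISORY)
print(cve_ids)  # ['CVE-2021-44228', 'CVE-2021-45046']
```

The extracted IDs can then be matched against an asset inventory or looked up in a vulnerability database.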

Legal Frameworks Governing Web Scraping

Different jurisdictions regulate web scraping differently. Some key laws to understand include:

| Law | Region | Implication for Web Scraping |
|---|---|---|
| GDPR | EU | Requires a lawful basis, such as consent, for collecting and processing personal data, including scraped data. |
| CCPA | California, USA | Protects consumers’ personal data from unauthorized use. |
| CFAA | USA | Criminalizes unauthorized access to computer systems. |
| DMCA | USA | Prohibits circumventing access controls on copyrighted works and supports takedown of infringing copies. |

Before deploying large-scale scrapers, businesses should always consult legal experts to ensure compliance.

Web Scraping vs. Web Crawling

These two terms are often used interchangeably — but they serve different purposes.

| Feature | Web Scraping | Web Crawling |
|---|---|---|
| Purpose | Extract data | Discover web pages |
| Focus | Specific information | Indexing entire sites |
| Example Tool | BeautifulSoup, Scrapy | Googlebot, AhrefsBot |
| Output | Structured datasets | Search engine indexes |

In simple terms, web crawling finds data, while web scraping extracts it.
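To make the distinction concrete, the sketch below shows only the crawling half: it collects the links on a page and keeps those on the same site as candidates for the next visit. A scraper would then extract specific fields from each of those pages. The URLs, markup, and function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects absolute URLs from the href attribute of every <a> tag."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links against the page URL.
            self.links.append(urljoin(self.base_url, href))


def discover_links(base_url, html):
    """Crawling step: return same-site links found on the page, without duplicates."""
    collector = LinkCollector(base_url)
    collector.feed(html)
    host = urlparse(base_url).netloc
    unique = dict.fromkeys(collector.links)  # preserves first-seen order
    return [link for link in unique if urlparse(link).netloc == host]


PAGE_URL = "https://shop.example/catalog/"
PAGE_HTML = """
<a href="/catalog/mouse">Mouse</a>
<a href="hub">Hub</a>
<a href="https://other.example/ad">Ad</a>
<a href="/catalog/mouse">Mouse again</a>
"""

next_pages = discover_links(PAGE_URL, PAGE_HTML)
print(next_pages)
# ['https://shop.example/catalog/mouse', 'https://shop.example/catalog/hub']
```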

Future of Web Scraping

As data continues to drive business decisions, web scraping will evolve alongside AI and automation.

Emerging Trends:

- AI-Powered Scraping: Intelligent bots that adapt to anti-scraping defenses.
- Data-as-a-Service (DaaS): Companies offering pre-scraped, compliant datasets.
- Real-Time Intelligence: Live data extraction for financial, cybersecurity, or IoT monitoring.
- Enhanced Security Protocols: Integrating web scraping detection with Zero Trust security models.

In 2025 and beyond, ethical, compliant, and secure scraping will be the new standard for businesses leveraging web data responsibly.

Conclusion: Balancing Insight and Integrity

So, what is web scraping in today’s digital world? It’s a powerful tool that enables businesses to extract valuable insights — but it must be used with care, compliance, and cybersecurity awareness.

When used ethically, web scraping empowers smarter decisions, deeper market understanding, and proactive cyber defense. When abused, it can lead to legal trouble and data breaches.

👉 Want to protect your digital assets from unauthorized data scraping?

Request a Free Demo from Xcitium — and discover how enterprise-grade threat intelligence and endpoint protection can defend your business in real time.

FAQs About Web Scraping

1. Is web scraping legal?

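It depends on the jurisdiction and the data. Scraping publicly available data in line with a website’s terms of service is generally lower risk, while scraping personal or copyrighted data without a lawful basis or permission can violate privacy and copyright laws.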

2. What is the difference between web scraping and data mining?

Web scraping collects data, while data mining analyzes it to find patterns or insights.

3. Can web scraping harm my website?

Yes. Aggressive scraping can overload servers or expose sensitive data if defenses are weak.

4. What’s the safest way to scrape data?

Use official APIs, respect robots.txt, and follow ethical and legal standards.

5. How can businesses protect themselves from scrapers?

Use firewalls, CAPTCHA systems, behavioral bot detection, and cybersecurity tools like Xcitium Endpoint Protection.